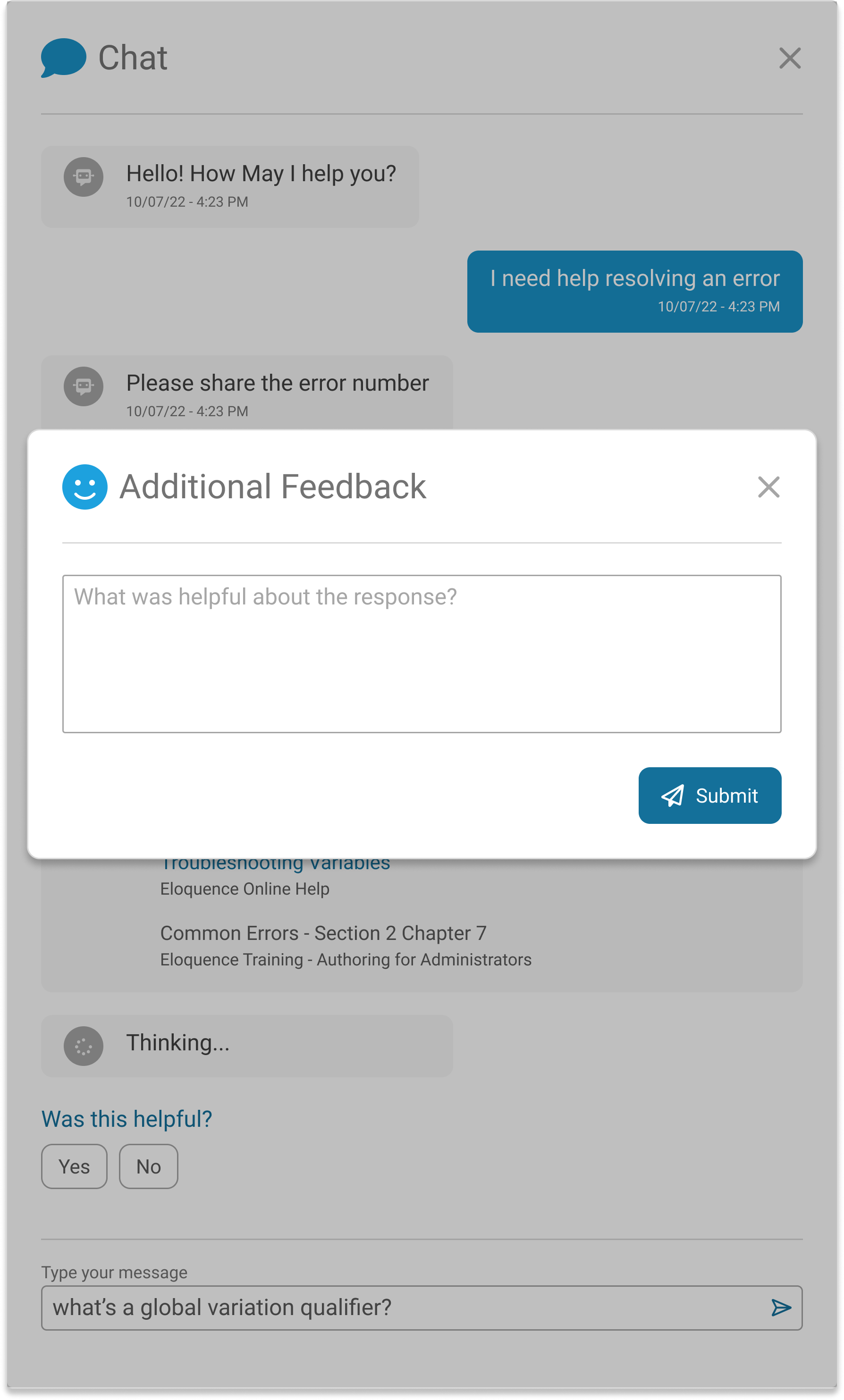

An AI-based chatbot that guides new and experienced users through complex features, bridging gaps in the documentation and simplifying navigation so business users can reach their goals more quickly and confidently.

My Role

Conducted user interviews

Led conference workshops

Analyzed analytics data

Designed responsive screens

Focusing Our Design Efforts

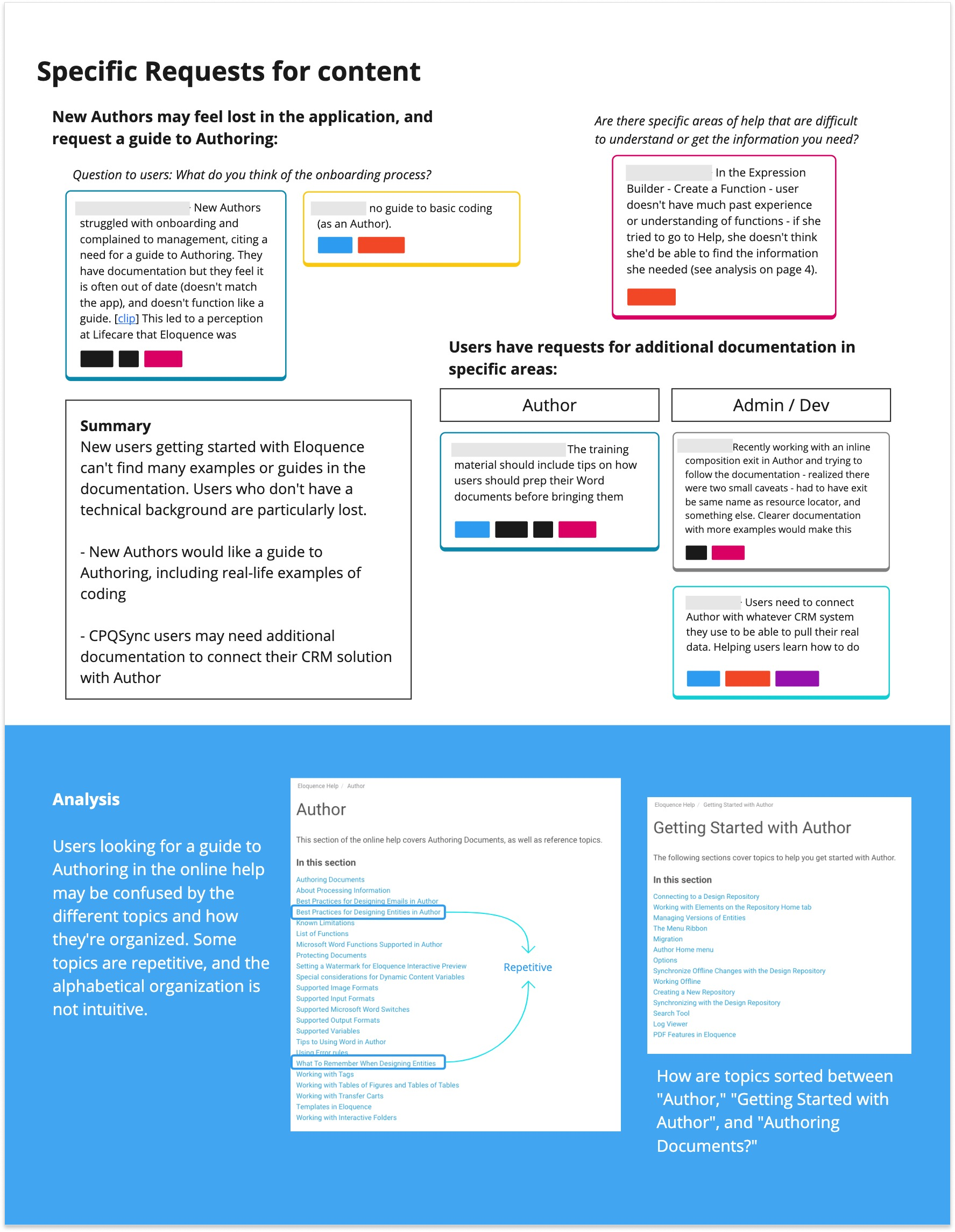

A major challenge for our customers is onboarding new business users, because both the application and its documentation are complex. We wanted to explore ways to improve this experience, helping customers reduce training time and lower support costs.

Persona

Authors are business users who design communication templates for print, email, and SMS. These templates can be complex, with multiple reusable building blocks, variables, and logic.

Identifying User Pain Points

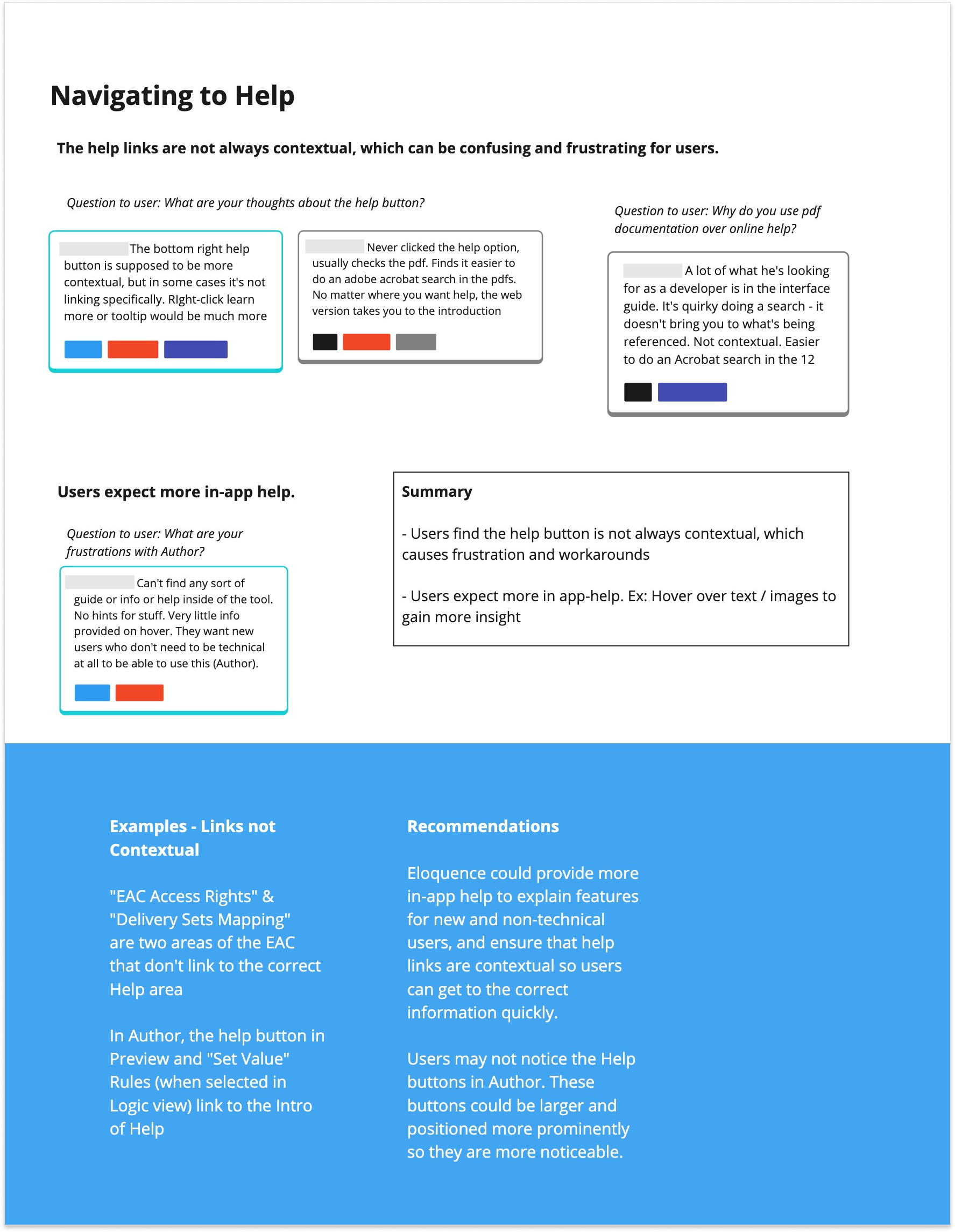

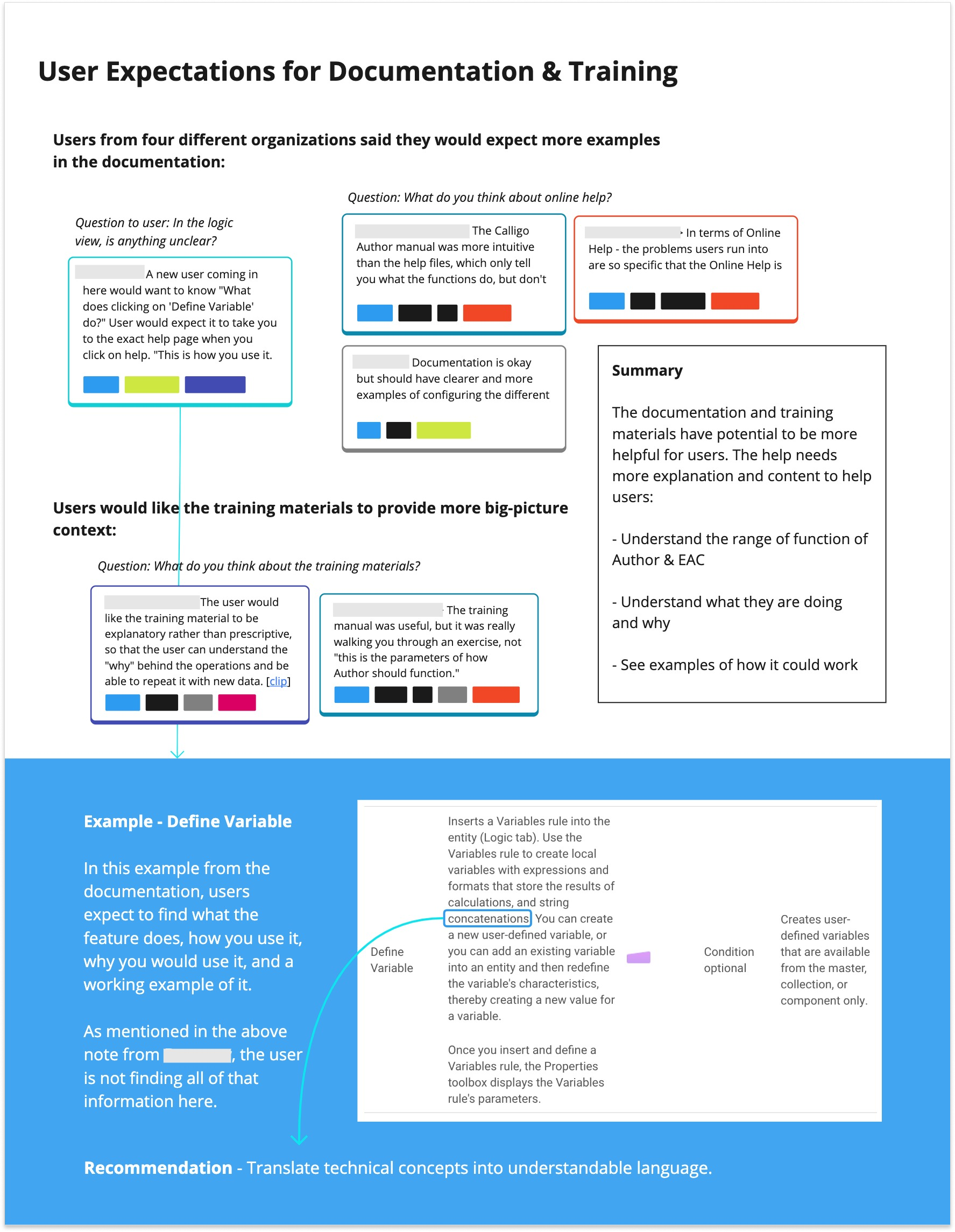

During user interviews, seven Authors from different organizations asked for more in-app and contextual help, specific guides, and working examples.

Problem Statement

How might we ensure the more complicated and technical features of the application are understandable for business users?

Strategy and Chatbot Design

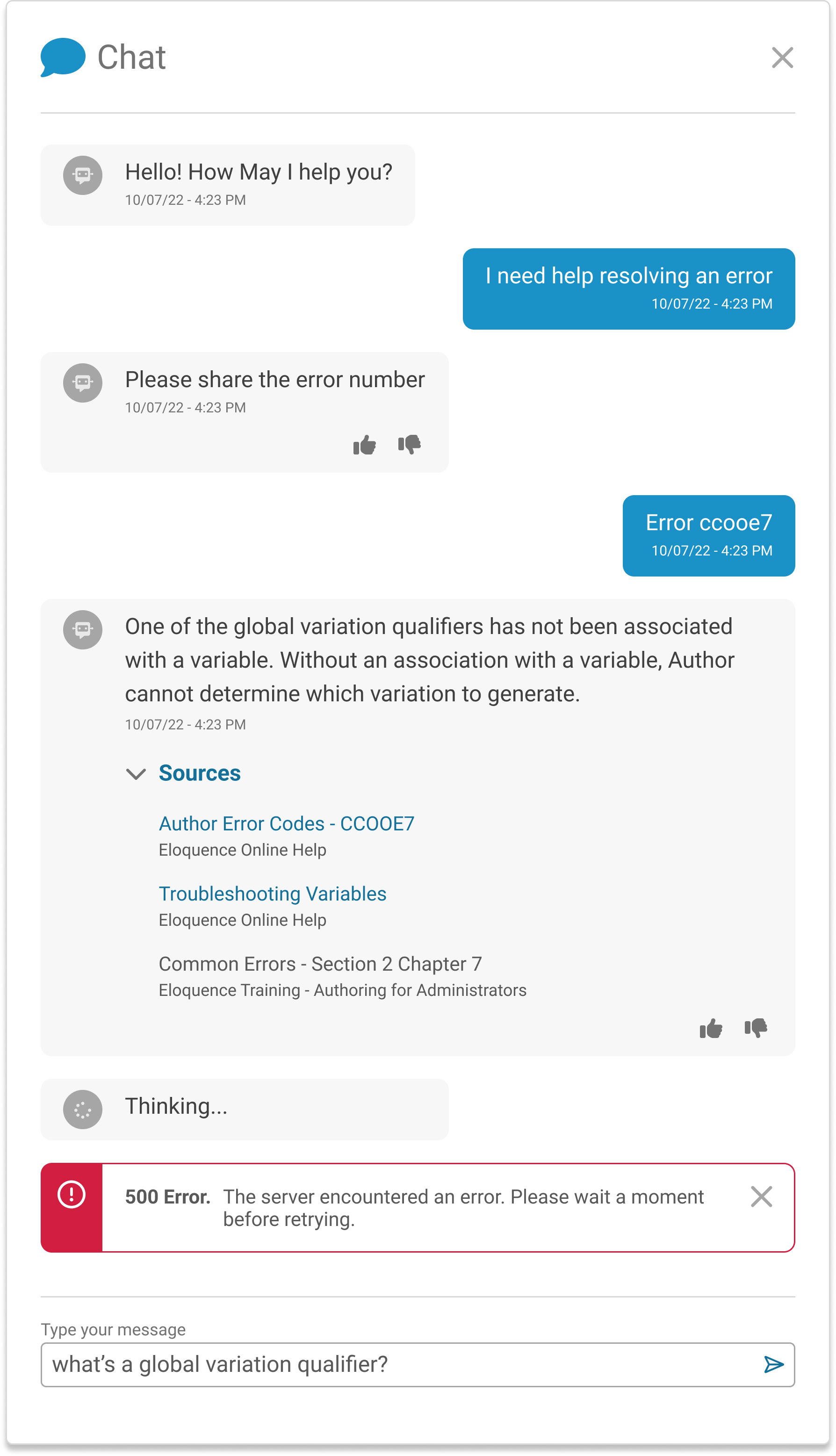

Onboarding new business users was slowed by the complexity of the application and its large help system. With a full documentation overhaul out of reach, we explored an AI-based chatbot that could provide fast, intuitive guidance and support for technical features.

Our engineers developed the Author Chatbot, which was fine-tuned on documentation and synthetic conversations. After internal testing and before user trials, the chatbot delivered instant answers, helping users learn the system more quickly and reducing both training time and errors.

UX Analysis

We tested the prototype with customers at our annual user conference. Even at this early stage, users' interactions provided valuable insights, although it was clear that improving the chatbot's accuracy would require significant time and resources.

Thirty-three users who were familiar with Author interacted with the chatbot, asking it 136 questions in total.

Insights

Analyzing users’ interactions with the bot revealed common questions and user reactions. Even with a small sample, we identified patterns that informed recommendations for improving both documentation and the app.

Reflection

Our research showed that a documentation chatbot could add significant value and potentially evolve into a full AI assistant. Developing it would require substantial investment and thorough testing to ensure reliable guidance. Prototyping offered key insights for UX and engineering, but the business ultimately decided the required resources outweighed the benefits at this time.